Conversations in Collaboration: Gartner’s Tom Coshow on AI Agents and Agentic AI

AI Agents don't just advise or assist human workers. They take action and they need to be purpose-built to complete tasks.

February 11, 2025

Welcome to No Jitter’s Conversations in Collaboration, in which we speak with executives and thought leaders about the key trends across the full stack of enterprise communications technologies.

In this conversation, we spoke with Tom Coshow, a senior director analyst with Gartner's technical service providers division focusing on business process outsourcing customer management (BPO CM). He supports customer experience, CX technology, and executive leadership in the CMO BPO space.

Tom Coshow, Gartner

In this conversation, Coshow unpacked Gartner’s definitions of two hot topics in generative AI and enterprise communications: AI Agents and Agentic AI. Much of the conversation centered on multiagent systems and the importance of purpose-built AI agents that are good at discrete tasks and then making sure those AI agents communicate with each other in prescribed ways.

No Jitter (NJ): What's the difference between agentic AI and AI agents and the generative AI assistants that are now everywhere in most enterprise products?

Tom Coshow (Coshow): AI agents are autonomous or semiautonomous software entities that use AI techniques to perceive, make decisions, take actions and achieve goals in their digital or physical environments. AI agents have the agency to make a decision about how to execute toward the goal, and they have the tooling to go out and actually take action in a digital environment. The term ‘Agentic AI’ is a little trickier, because you see a lot of Agentic AI definitions that make it seem different than AI agents. But at Gartner, we consider Agentic AI to be an umbrella term that includes AI agents.

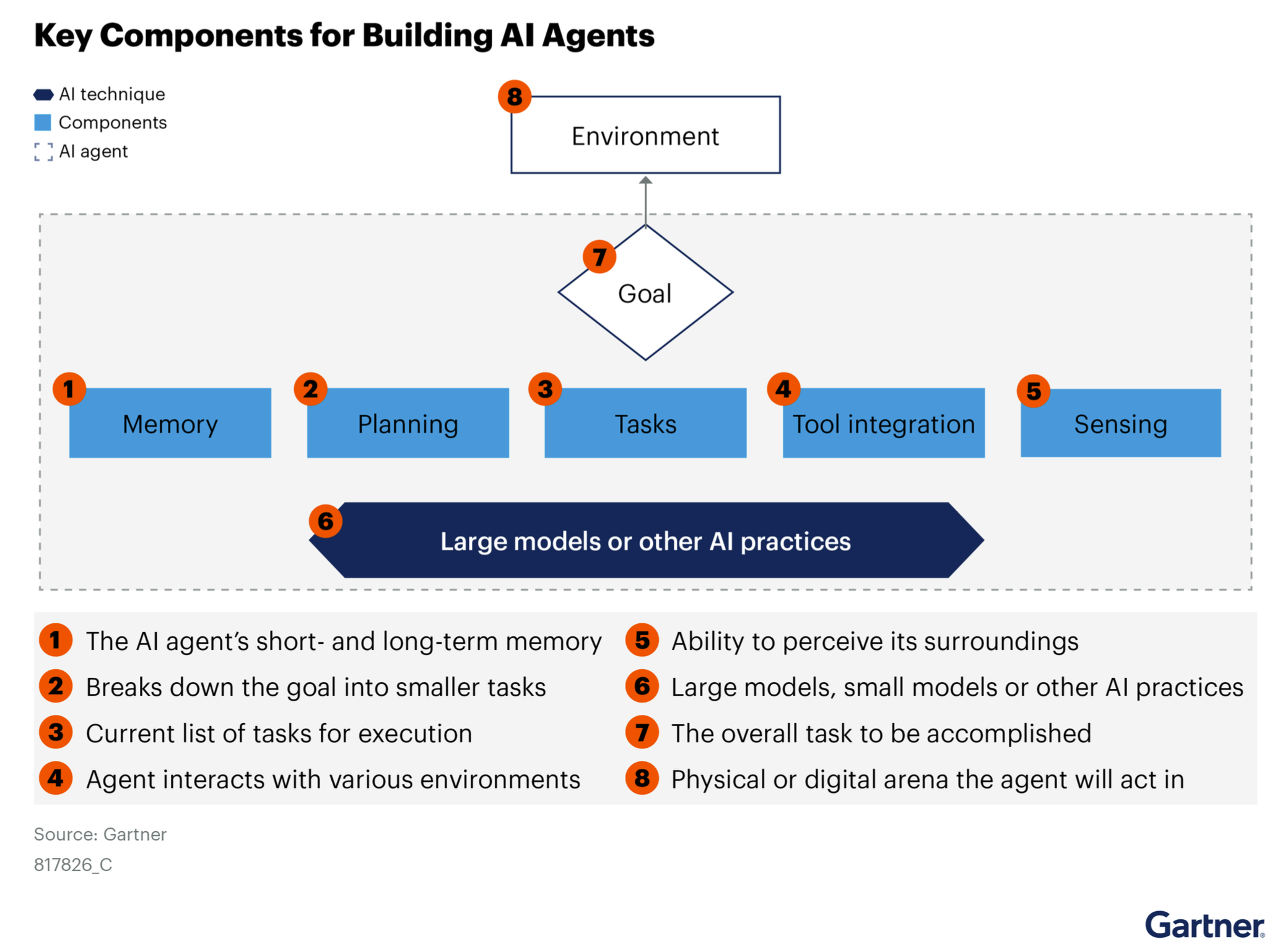

[Referring to the diagram below], this is one agent with its memory and its ability to plan, execute tasks and use tools. It also has a system prompt and works with a large language model [LLM] to go out into the environment to achieve a goal.

One of the reasons [the AI agents] in production are pretty ‘locked down,’ is because when you build a system prompt, it has to be perfect. The [system prompt, which is in every AI agent] must tell the large language model precisely what to do – [the system prompt] tells the agent to decide between four APIs to call, as one example. You don't want it to be wide open, because then the LLM might get confused.
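To make the "locked down" idea concrete, here is a minimal Python sketch. It is illustrative only and not something Coshow presented. The four tool names, the prompt wording and both function names are hypothetical. The sketch builds a narrow system prompt that lists the only permitted tools, then rejects any model reply that names something else.

```python
# Hypothetical tool names; the interview only says "four APIs".
ALLOWED_TOOLS = (
    "lookup_order",
    "check_balance",
    "update_address",
    "escalate_to_human",
)


def build_system_prompt(tools):
    """Build a narrow system prompt that lists the only permitted tools."""
    tool_list = "\n".join(f"- {name}" for name in tools)
    return (
        "You are a customer-account agent.\n"
        "Choose exactly one of the following tools and reply with its name only:\n"
        f"{tool_list}\n"
        "If none applies, reply with escalate_to_human."
    )


def validate_tool_choice(model_reply, tools=ALLOWED_TOOLS):
    """Accept the model's reply only if it names a permitted tool."""
    choice = model_reply.strip()
    if choice not in tools:
        raise ValueError(f"Model chose an unknown tool: {choice!r}")
    return choice


if __name__ == "__main__":
    print(build_system_prompt(ALLOWED_TOOLS))
    print(validate_tool_choice(" check_balance\n"))
```

The validation step is a design choice of this sketch: even with a tight prompt, checking the reply against the allow-list keeps an off-script answer from turning into an action.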

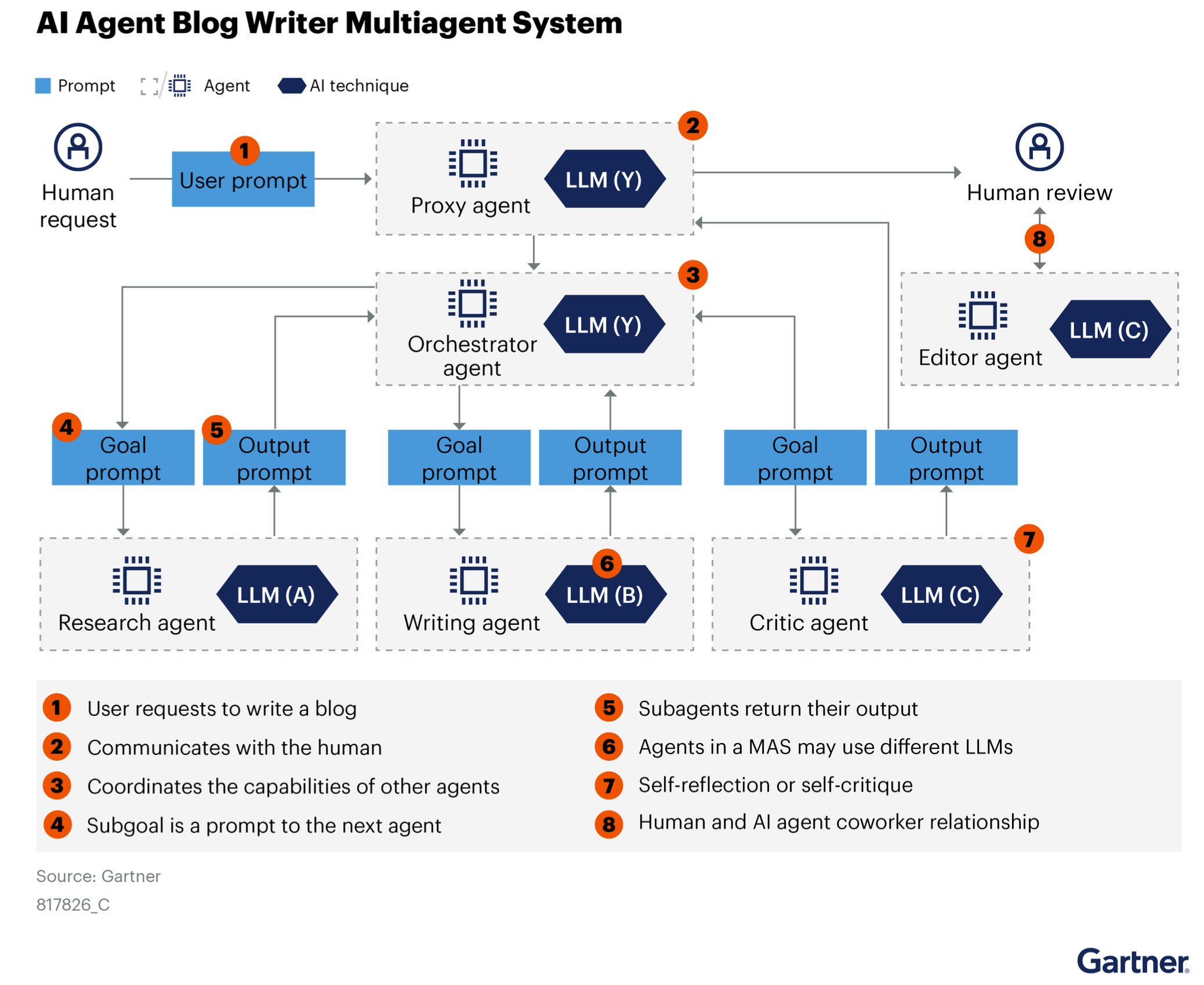

In a multiagent system, you’d have purpose-built agents – for writing, for researching, etc. I saw a pharmacy agent that all it did was look up your pharmaceutical history. It’s just easier if you split the tasks out. Here’s another example. Say I build the perfect credit card agent. I can then use it in multiple workflows. But I don’t want to build a single agent that answers shipping questions, product questions, policy questions, while also trying to be the orchestrator [agent] all at the same time. It makes more sense to have an orchestrator agent that says, ‘Oh, this is what you want’ – whether it's from a human or another agent – ‘so I'll direct you to the credit card agent.’
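The orchestrator pattern described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Gartner's design: the agent functions are stubs, and `classify_intent` uses keyword matching as a stand-in for the LLM call a real orchestrator would make. All names are hypothetical.

```python
from typing import Callable, Dict


# Purpose-built agents: each one does a single job (stubs for illustration).
def credit_card_agent(request: str) -> str:
    return f"[credit card agent] handling: {request}"


def shipping_agent(request: str) -> str:
    return f"[shipping agent] handling: {request}"


def pharmacy_agent(request: str) -> str:
    return f"[pharmacy agent] handling: {request}"


AGENTS: Dict[str, Callable[[str], str]] = {
    "credit_card": credit_card_agent,
    "shipping": shipping_agent,
    "pharmacy": pharmacy_agent,
}


def classify_intent(request: str) -> str:
    """Stand-in for an LLM call: pick an intent by keyword matching."""
    text = request.lower()
    if "card" in text:
        return "credit_card"
    if "ship" in text or "delivery" in text:
        return "shipping"
    if "prescription" in text or "pharmacy" in text:
        return "pharmacy"
    return "unknown"


def orchestrate(request: str) -> str:
    """Route a request (from a human or another agent) to one agent."""
    agent = AGENTS.get(classify_intent(request))
    if agent is None:
        return "[orchestrator] no matching agent; handing off to a human"
    return agent(request)


if __name__ == "__main__":
    print(orchestrate("My card was declined at checkout"))
    print(orchestrate("What is the meaning of life?"))
```

Because each agent sits behind the same one-argument interface, the credit card agent can be reused in other workflows without change, which is the reuse benefit Coshow points to.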

Also, the better the data, the better the agent. We’ve seen some smaller companies that have been focused on helping corporations improve the quality of their data also enter the AI agent space, based on the idea that they really understand the data. You saw this in the early deployments of Gen AI – somebody would build a customer service chatbot based on customer service documentation that was 20 years old and contradictory. That made for a bad chatbot. I think the quality of the data and even maybe some pre-reasoning on the data to drive AI agent behavior is something we’ll see emerge as a real trend.

NJ: How have AI assistants impacted productivity in the contact center and among desk workers?

Coshow: I would say all the interest around AI agents is largely driven by the lack of productivity we've seen from most Gen AI deployments. It is the CIOs’ dream that AI agents are actually going to achieve that increase in productivity because AI agents don't just advise, they take action.

I think a good AI assistant can make new contact center agents much more efficient, very quickly. They don't really help the expert contact center agent because that Gen AI assistant was built based on the contact center's best agents.

(Editor’s note: Check this out for more on generative AI and productivity.)

NJ: So what does productivity mean in the assistant world versus the agentic world?

Coshow: In the AI agent world, productivity is literally taking action and doing things that a human used to have to do, whereas the AI assistant is advising [the human worker] on what to do.

NJ: How is the cost of an AI agent being looked at? Is it compared to the human doing that job?

Coshow: The cost of an AI agent compared to a human really depends on how many times the AI agent has to access a large language model and how many tokens are involved. So, it's always hard to compare to human activity. The cost of AI agents depends on which model they use. If they're accessing the latest, greatest, most expensive model, their cost might be significant compared to using a small language model that has been fine-tuned to a specific domain. Those costs – of using a small language model – will be dramatically less.
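The cost drivers named above (number of LLM calls, tokens per call, and the model's price) combine into simple arithmetic. The Python sketch below shows the shape of that calculation. The model names, per-token prices and token counts are made up for illustration and do not reflect any vendor's actual pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    name: str
    usd_per_1k_input_tokens: float
    usd_per_1k_output_tokens: float


def cost_per_task(pricing, llm_calls, input_tokens_per_call, output_tokens_per_call):
    """Cost of one agent task = calls x (input cost + output cost per call)."""
    per_call = (
        input_tokens_per_call / 1000 * pricing.usd_per_1k_input_tokens
        + output_tokens_per_call / 1000 * pricing.usd_per_1k_output_tokens
    )
    return llm_calls * per_call


# Made-up prices for illustration only.
large = ModelPricing("large-frontier-model", 0.01, 0.03)
small = ModelPricing("small-fine-tuned-model", 0.0005, 0.0015)

# Assume the same workload for both models: 6 calls per task.
large_cost = cost_per_task(large, llm_calls=6, input_tokens_per_call=2000, output_tokens_per_call=500)
small_cost = cost_per_task(small, llm_calls=6, input_tokens_per_call=2000, output_tokens_per_call=500)

if __name__ == "__main__":
    print(f"{large.name}: ${large_cost:.4f} per task")
    print(f"{small.name}: ${small_cost:.4f} per task")
```

The sketch holds the workload fixed so that only the model price changes. In practice a smaller model may need more or fewer calls for the same task, so call counts should be measured rather than assumed.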

One thing I would expect to see more this year is the use of small, fine-tuned language models. If you build your AI agent correctly, the system prompt tells the language model exactly what it needs back. If you have a small language model that is very capable, and your AI agent is giving it good information, like good instructions and good data, then the small language model should be good enough to drive AI agent behavior.

NJ: So what does an enterprise need to build an agentic AI platform? Right now it seems like it’s a choice between a platform approach – like what Salesforce and ServiceNow and others are doing – versus assembling different pieces in an ‘agentic framework’ on their own. Is that accurate?

Coshow: My feeling is that most enterprises are looking for some sort of platform, whether that is a smaller, independent platform, or if it has something to do with their hyperscaler. It'll probably be a mix.

I think big enterprises fear ending up with 30 different AI agent platforms, but maybe they don't want to be locked into one. So, right now they're comfortable with three or four.

The startup space for AI agent platforms is very active, and people are definitely looking at them. You have horizontal platforms where you can build any kind of agent you want, and then you have vertical platforms that are focused on a specific industry or even focused on a software platform itself.

NJ: What are your thoughts on guardrails to ensure the AI agent operates within specific guidelines?

Coshow: The key is giving the AI agent the correct instructions through its system prompt and not overloading it with too many choices. That's why you see multiagent systems becoming popular. For example, I know a large global company that built a generative AI assistant for North America. Everybody loved it, and so they added in Japan and Europe, and it started giving crazy answers. It gave those answers because they were making the large language model decide among 1,000 things instead of five. That's just bad design. An AI agent should be built for purpose.

NJ: What are some of the risks or challenges associated with building an AI agent solution and deploying it?

Coshow: When people build their first agent, they're surprised at how easily it goes crazy. When you build your first two-agent system, it's even worse. They're not as easy to build as often gets portrayed. And in multiagent systems, the agents have to talk to each other, and that communication needs to be perfect, because you don't want any degradation throughout the workflow because they're not talking to each other well.

NJ: How do you ensure that they talk well with one another?

Coshow: They might communicate in different ways, but let's just say they're prompting each other's API. You want to make sure that that prompt is perfectly organized for the next step of the workflow. You know the telephone game we played as kids? You don’t want to have a ‘telephone problem’ in a multiagent system.
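One way to guard against the "telephone problem" is to pass a structured, validated message between agents instead of free text. The Python sketch below is an assumption about how that could look, not a method from the interview. The `Handoff` fields and the required context keys are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class Handoff:
    """Structured message one agent sends to the next (hypothetical schema)."""
    sender: str
    recipient: str
    task: str
    context: Dict[str, str] = field(default_factory=dict)


# Assumed minimum context every downstream agent needs.
REQUIRED_CONTEXT = {"customer_id", "original_request"}


def validate_handoff(msg: Handoff) -> Handoff:
    """Reject a handoff that would leave the next agent guessing."""
    if not msg.task.strip():
        raise ValueError("handoff has an empty task")
    missing = REQUIRED_CONTEXT - set(msg.context)
    if missing:
        raise ValueError(f"handoff is missing context: {sorted(missing)}")
    return msg


def render_prompt(msg: Handoff) -> str:
    """Turn a validated handoff into a consistently ordered prompt."""
    validate_handoff(msg)
    lines = [f"From: {msg.sender}", f"To: {msg.recipient}", f"Task: {msg.task}"]
    lines += [f"{key}: {msg.context[key]}" for key in sorted(msg.context)]
    return "\n".join(lines)


if __name__ == "__main__":
    msg = Handoff(
        sender="orchestrator",
        recipient="credit_card_agent",
        task="Explain why the last charge was declined",
        context={"customer_id": "cust-42", "original_request": "My card was declined"},
    )
    print(render_prompt(msg))
```

Carrying the original request forward in every handoff means each agent sees what the user actually asked, rather than a paraphrase of a paraphrase.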

NJ: This sounds like it ties into the concept of memory – what the AI agent itself “remembers” when it communicates with other agents. Could you go into that topic of memory?

Coshow: Usually, memory refers to short-term memory so that AI agents can execute their task in order – it's the short-term state memory of what's happening right now so the agent knows where it is and what it's doing.

But there is some confusion in the market about what memory means. Some consider memory to be a piece of information stored in a CRM or knowledge graph that drives agent behavior – that could be called long-term memory.

And then there is another idea of memory in which all the agent activity is stored ‘off to the side,’ and then that activity is analyzed in a way that helps us make the agents better – and there are people working on automated ways to do that, which gets you into an agent that learns. But this is a tricky topic, because when you get into robotics, like the Boston Dynamics dogs that walk, or in video gaming, they use reinforcement learning to make those systems better.

In the business world, I don't see any of that. Right now, memory in the business world either means some piece of information you're pulling out of the data, or the short-term memory that enables the agent to execute its task in order – or maybe memory that will be used to improve the agent.
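The three meanings of memory discussed above can be told apart in a small Python sketch. This is illustrative only. The `CRM` dictionary stands in for a real CRM or knowledge graph, and the class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Long-term memory: a stand-in for a CRM or knowledge graph (made-up data).
CRM = {"cust-42": {"tier": "gold"}}


@dataclass
class AgentRun:
    steps: List[str]
    # Short-term state memory: where the agent is in its task right now.
    current_step: int = 0
    # Activity stored "off to the side" for later analysis.
    activity_log: List[str] = field(default_factory=list)

    def advance(self, customer_id: str) -> str:
        """Execute the next step in order, consulting long-term memory."""
        step = self.steps[self.current_step]
        tier = CRM.get(customer_id, {}).get("tier", "standard")
        self.activity_log.append(f"{step} (tier={tier})")
        self.current_step += 1
        return step

    @property
    def done(self) -> bool:
        return self.current_step >= len(self.steps)


if __name__ == "__main__":
    run = AgentRun(steps=["verify_identity", "issue_refund"])
    while not run.done:
        print("executing:", run.advance("cust-42"))
    print(run.activity_log)
```

Here the log is only recorded. Using it to adjust a system prompt or fine-tune a model would be a separate step that this sketch does not attempt.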

And then there’s the idea that if you create an AI agent memory you might be able to use that information to fine-tune a model and make the agent smarter. Or you might be able to use that information to adjust the system prompt of the agent to make it smarter, because you're looking at the results and you realize the system prompt needs to be adjusted. But, right now, there are different forms of [these ideas] and it's mostly talk.

Most platforms, even those provided by startups, have a panel that explains everything that the agent did. And if it's a multiagent system, you can see the communication among the agents, which often will show you exactly what's going wrong and what's going right.

NJ: Who writes the system prompts? Is it humans initially, and then that gets turned over to the agents to write their own prompts as they interact with other agents?

Coshow: The system prompt in each agent is written by humans to be very precise and tell it what to do, and, if they see it do something crazy, to tell it never to do that again. And remember, there's a difference here. There's a system prompt inside this agent [Coshow referred to the proxy agent in the diagram below; the “proxy agent” facilitates communication with the user or other agents], but when it prompts the orchestrator agent, that's different.

The prompt written for the orchestrator is important because it [governs] things like the temperature at which the LLM is set – how creative or factual you want it – what CRM can it call, what functions or what other tools it can access. In fact, these ‘system prompts’ exist in every agent and, just as with the orchestrator agent, these prompts are very important.

As for agents writing their own prompts, I’ve seen examples where people said that only humans would build system prompts but have since decided that while humans will write ‘version one’ of the prompt, an AI agent then uses an LLM to write subsequent prompts – and it writes a better version of the system prompt than the humans can.

[Editor’s note: In the Gartner research brief, Coshow defined the orchestrator as the agent that controls the workflow; the orchestrator is also aware of the capabilities of the other agents in the workflow, and it coordinates the efforts and may check the work of other agents. Coshow noted, as well, in reference to the following diagram that multiple LLMs can be used in the multiagent system.]

NJ: Are multiagent systems already here, or is that something that's going to develop throughout the course of this year?

Coshow: They are here, but they are going to become much more popular and much more common in 2025. I think we’ll also see testing centers, where I can run 5,000 examples through my agent flows and find out whether they passed or failed. Lastly, I think we’ll see more use of self-reflection.
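The "testing center" idea, running many examples through an agent flow and recording pass or fail, can be sketched as a small harness. The Python below is a toy under stated assumptions: `toy_flow` is a hypothetical stand-in for a real agent workflow, and exact string match is only one possible pass criterion.

```python
from typing import Callable, Dict, Iterable, Tuple


def run_suite(agent_flow: Callable[[str], str],
              cases: Iterable[Tuple[str, str]]) -> Dict[str, object]:
    """Run every (prompt, expected) case through the flow and tally results."""
    failures = []
    total = 0
    for prompt, expected in cases:
        total += 1
        actual = agent_flow(prompt)
        if actual != expected:
            failures.append((prompt, expected, actual))
    return {"total": total, "passed": total - len(failures), "failures": failures}


def toy_flow(prompt: str) -> str:
    """Hypothetical stand-in for an agent flow: labels refund requests."""
    return "refund" if "refund" in prompt.lower() else "other"


cases = [
    ("I want a refund", "refund"),
    ("Where is my order?", "other"),
    ("REFUND please", "refund"),
    ("Cancel my plan", "cancel"),  # the toy flow has no 'cancel' label
]

report = run_suite(toy_flow, cases)

if __name__ == "__main__":
    print(f"{report['passed']}/{report['total']} passed")
    for prompt, expected, actual in report["failures"]:
        print(f"FAIL: {prompt!r} expected {expected!r}, got {actual!r}")
```

The same loop scales to thousands of cases. Real agent output is rarely an exact string, so the comparison would likely be replaced by a scoring function suited to the task.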

[Editor’s note: Per the Gartner brief, a “common AI agent element is self-reflection or self-critique. In this workflow, the critic agent provides feedback on the note so that the human can view alternative content suggestions.”]

NJ: Is that the agent teaching itself?

Coshow: I wouldn't go that far. What I would say is that in a workflow there will be a self-reflection step that looks at the work that has been done and says there's something wrong with [what was done]. The work is then sent back to the orchestrator, along with the ‘notes’ of what it did right or wrong, to do again. If it comes back again with another below par response, the task gets sent to a human being. I think this year we'll see self-reflection used in multiagent workflows to track, double check and improve the work being done.
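The self-reflection step described above (review the work, send it back with notes, and escalate to a human after a second below-par result) maps onto a short loop. The Python sketch below is one possible reading of that flow, not an implementation Coshow described. The worker and critic are toy functions, and all names are hypothetical.

```python
from typing import Callable, Optional, Tuple


def run_with_reflection(
    task: str,
    worker: Callable[[str, Optional[str]], str],
    critic: Callable[[str], Optional[str]],
    max_attempts: int = 2,
) -> Tuple[str, Optional[str]]:
    """Run worker, let critic review, retry with notes, then escalate.

    worker(task, notes) returns a draft; critic(draft) returns notes on
    what is wrong, or None when the draft is acceptable.
    """
    notes = None
    for _ in range(max_attempts):
        draft = worker(task, notes)
        notes = critic(draft)
        if notes is None:
            return ("done", draft)
    # Still below par after the allowed attempts: hand off to a person.
    return ("escalated_to_human", notes)


def toy_worker(task: str, notes: Optional[str]) -> str:
    """Toy worker that only adds a greeting once the critic asks for one."""
    draft = f"Summary of {task}"
    if notes and "greeting" in notes:
        draft = "Dear customer, " + draft
    return draft


def toy_critic(draft: str) -> Optional[str]:
    """Toy critic with a single rule: the draft must open with 'Dear'."""
    return None if draft.startswith("Dear") else "add a greeting"


if __name__ == "__main__":
    print(run_with_reflection("ticket 17", toy_worker, toy_critic))
    print(run_with_reflection("ticket 18", lambda task, notes: "bad draft", toy_critic))
```

Capping the loop at two attempts mirrors the flow in the interview. The cap also bounds the number of LLM calls a single task can consume.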
