Decoding Dialogflow: What Makes a Good Bot

The second in a multi-article series focusing on building intelligent bots using Google Dialogflow and Contact Center AI

June 2, 2019

In part one of this series, we discussed conversational AI and described the principal capabilities Google Contact Center AI (CCAI) provides: Virtual Agent, Agent Assist, and Conversational Topic Modeler. We also illustrated how Dialogflow, Google’s natural language understanding engine within CCAI, works. In this article we focus on some of the characteristics that make for a good intelligent virtual agent or bot project.

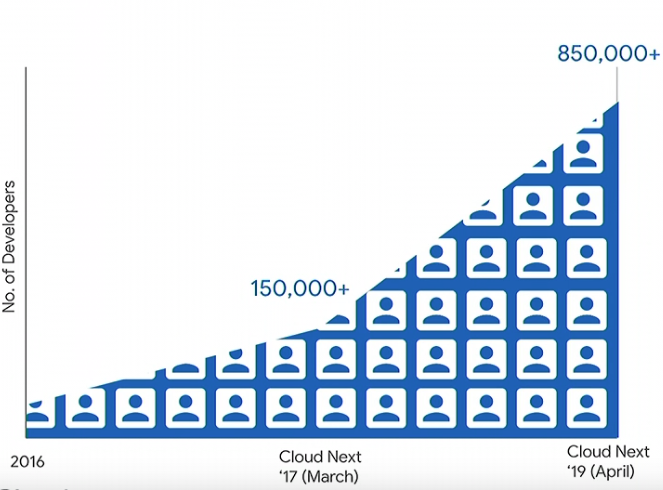

The tremendous interest in conversational AI is a global phenomenon. Google reports striking year-over-year growth in the number of developers who are using Dialogflow to create intelligent agents, with the number of account users surpassing 850,000 as of April.

With the growing importance of intelligent agents and conversational interfaces, it is worth asking, “What makes a good bot project?” And, just as usefully, “What causes bots to fail?”

Characteristics of Good and Bad Bots

In an email exchange around what constitutes a good bot, Google suggested that “any use case where you interact with customers regularly to address questions or other needs” could be a candidate for an intelligent bot project. Within the scope of the contact center, this will include classic customer support services in which a significant percentage of the call volume corresponds to a recurring set of customer questions or situations. Having live agents respond over and over to the same frequently asked questions (FAQs) is one example of an activity that could potentially be automated to free up valuable live agent time.

Other examples include process-oriented support such as changing a password, transferring money, or placing an order for food items or tickets. Bots are even beginning to augment existing processes: in an unusual twist, Google reports that it has successfully created bots that teach live agents how to better interact with customers.

What Makes a Good Bot

So, if intelligent bots are going to be so important in our future, what are some of the characteristics that go into designing a good bot? Here are some specifics:

1. A bot needs to do something useful for the customer and do it in a way that is easier than other alternatives. It should save time and reduce the effort a customer needs to expend to resolve her or his needs while interacting with an organization’s products or services. It then needs to get out of the way, meaning that the bot should be efficient, succinct, and fast.

2. A bot needs to communicate clearly to the customer what it can do. Just telling a customer he or she is conversing with a bot isn’t enough. The bot needs to state, in a succinct way, what its capabilities are.

3. A bot needs to reflect an organization’s brand and image. It should behave and act in support of the company’s overall corporate persona. The way messages are crafted, the words used, the tone of voice, the cadence, wit, and sass need to be designed so that the people using the bot are continuously exposed to the brand’s values during their engagements with the bot.

4. A bot should understand the user’s intent and the context.

Understanding intent involves a concept linguists call “conversational implicature.” The meaning we take from words is rarely literal; consequently, the bot needs to understand what we mean, not necessarily what we say. An example would be, “Pass the salt.” What the speaker really means by this phrase is that they want the salt handed to them so they can add it to their meal, not that they want it thrown or tossed to them.

In Dialogflow, “context” has a specific meaning: It’s the relevant information surrounding a user’s request that was not directly referenced in the person’s words. In a weather bot, for example, when you say, “How’s the weather?” the context around this request involves both location and time. The bot needs to know these two things to give the person a response. Hence, if not spoken directly, the bot can make an assumption about context such that the real intent is that the person wants today’s weather for the current location.
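The weather example can be sketched in a few lines of Python. This is only an illustration of the idea of filling in unstated context with sensible defaults; it does not use the Dialogflow API, and the names `WeatherRequest`, `resolve_context`, and `device_location` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class WeatherRequest:
    """Parameters taken from the user's words; None means "not stated"."""
    location: Optional[str] = None
    day: Optional[date] = None


def resolve_context(request: WeatherRequest,
                    device_location: str,
                    today: date) -> WeatherRequest:
    """Fill unstated parameters with assumed context: here and today."""
    return WeatherRequest(
        location=request.location or device_location,
        day=request.day or today,
    )


# "How's the weather?" states neither a location nor a time.
bare = WeatherRequest()
resolved = resolve_context(bare, device_location="Denver", today=date(2019, 6, 2))
print(resolved.location, resolved.day)
```

Anything the user does say explicitly, such as a different city, takes priority over the assumed defaults.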

A context adjunct is that when possible the bot should be able to understand a customer’s journey. This implies that the bot may receive additional contextual information from backend systems. For example, it could know that the customer recently made a purchase with the company, or it could know that a customer is looking at a particular website page. Adding context data from the customer’s journey can then inform the bot and provide a better starting point for an interaction.

5. A bot needs to be designed using the “cooperative principle.” This principle relates to how people engage in effective conversation; it comprises four maxims:

Maxim of Quantity: The bot needs to provide as much information as necessary to advance the conversation, but no more information than is required.

Maxim of Quality: The bot needs to contribute true information and not bog the user down with things that may be false or for which the bot lacks evidence.

Maxim of Relevance: The bot’s response must be relevant within the context of the conversation.

Maxim of Manner: The bot must deliver clear responses that are easily understood.

6. A bot should be developed iteratively. The initial rollout should start simple, with frequent revisions until the bot is performing at a satisfactory level. Then drill deeper and add more features/capabilities. Good bots have performance metrics that a company can monitor continually, and a good bot is continually updated and maintained.

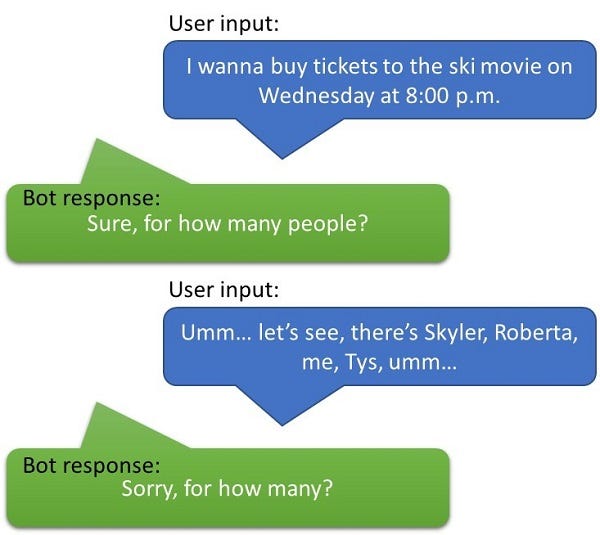

7. In human conversations, there are errors. A well-crafted bot knows how to keep the conversation going gracefully when something goes wrong. Three types of conversational errors can occur when a person interacts with a bot:

No Input – the person hasn’t responded, or the bot hasn’t heard the user’s response.

No Match – the bot can’t understand or interpret the person’s response in context.

System Error – the bot is asked to do something that it isn’t capable of doing.

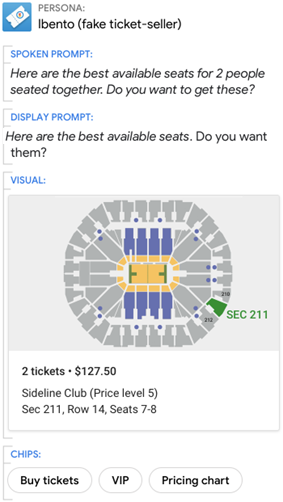
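One way to picture graceful recovery from these three error types is the minimal Python sketch below. The reprompt wording, the `MAX_REPROMPTS` limit, and the function name `recover` are all assumptions made for illustration; in a real project the prompts would come from the conversation designers.

```python
from enum import Enum


class ConversationError(Enum):
    NO_INPUT = "no_input"          # nothing was heard from the user
    NO_MATCH = "no_match"          # the response could not be interpreted
    SYSTEM_ERROR = "system_error"  # the request is beyond the bot's abilities


# Hypothetical reprompts that restate what the bot can do.
REPROMPTS = {
    ConversationError.NO_INPUT:
        "Sorry, I didn't hear anything. What would you like to do?",
    ConversationError.NO_MATCH:
        "Sorry, I didn't catch that. You can say 'check balance' or 'transfer money'.",
}

MAX_REPROMPTS = 2  # assumed limit before handing off to a person
HANDOFF = "Let me connect you with a live agent."


def recover(error: ConversationError, attempt: int) -> str:
    """Return the bot's next utterance after the attempt-th consecutive error."""
    if error is ConversationError.SYSTEM_ERROR or attempt > MAX_REPROMPTS:
        return HANDOFF
    return REPROMPTS[error]


print(recover(ConversationError.NO_MATCH, attempt=1))
print(recover(ConversationError.NO_MATCH, attempt=3))
```

The design choice here is that the bot reprompts a limited number of times and then escalates, rather than looping on the same question.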

8. A bot should be designed for multimodal access, meaning it should work across device types. The bot should take advantage of the conversational and/or the visual capabilities of the person’s device. For example, if the bot detects a person who it’s interacting with can see images or click on options, it should use these capabilities in the interaction if doing so would help move the conversation forward.

Different kinds of conversational and visual interactions are listed in the table below (source: Google).


Bad Bot, Bad Bot

There are good bots, but there are also a lot of bad bots. More precisely, there are some bots that don’t work well due to poor design and execution in their creation and maintenance. Here are some common mistakes that end with a poorly constructed bot:

1. Building bots around use cases that aren’t that strong. There is nothing wrong with experimenting, but it’s better to build a bot that really fulfills a current business objective and meets the customer’s expectations.

2. Trying to build a “super bot.” Building a single bot that handles lots of different scenarios is really difficult, and if you try to build Siri-like, Google Assistant-like, Cortana-like, or Alexa-like bots, you’re likely to fail. Avoid scope creep and keep the bot’s focus narrow, particularly in early iterations.

3. The bot doesn’t provide enough guidance for the end users on what its capabilities are and what they can do with the voice or text interface, leaving the user to guess and make mistakes while trying to use the bot.

4. Failure to design for conversational errors. Bots that can’t gracefully handle user mistakes are likely to experience poor user adoption.

5. Lack of transparency. One of the golden rules of bot adoption is that the users need to be told up front that they’re communicating with a bot.

6. Making it too hard to get to a person. Many IVR and some bot solutions are intentionally designed to eliminate the possibility of ever reaching a person. If someone can’t get to a human reasonably easily, satisfaction with the bot is likely to be poor. Design human escalation protocols into the bot’s programming.

7. The bot isn’t programmed to understand context. In human conversations, knowing the context is critical to successful conversations. The same is true with bots… they need to be programmed to gather or infer context surrounding the user’s intent.

8. Poor integration. The bot may not be integrated with the right backend systems to meet the user’s needs.

9. Having the IT organization build the bot and then move on, offering no maintenance or post-deployment retuning.

The Skill Sets Required to Build a Good Intelligent Virtual Agent

In our research, it has become clear that good bot projects will rely heavily on excellent conversational design. In fact, linguists and language analysts are key to these projects. In most intelligent bot projects, the team members have a mix of business and technical skills as follows:

Analysts, linguists, and conversational designers who will work with the data collected from conversations with live agents and from call logs to develop the conversational scenarios and sample dialogs that will be programmed into the Dialogflow agent. The importance of these people can’t be overstated.

Software engineers with experience in developing contact center solutions/IVRs and who have a basic understanding of how natural language understanding and machine learning work. These developers will need to understand how to work with Dialogflow and Google Cloud Platform, have experience with JSON and with Python or another programming language, and know how to interface with backend systems through APIs called from webhook functions.

Project managers who handle strategy, finance, project timelines, execution, stakeholders, communication, hiring, and so on.
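To give a sense of the webhook work the software engineers take on, here is a minimal Python sketch of a fulfillment handler. It assumes the JSON layout of the Dialogflow v2 webhook format as we understand it (`queryResult.intent.displayName`, `queryResult.parameters`, and a `fulfillmentText` reply) and should be checked against the current documentation. The intent name `order.status`, the `order_id` parameter, and the in-memory `ORDER_STATUS` lookup are hypothetical stand-ins for a real agent and backend.

```python
# Stand-in for a backend system; a real project would call an order API here.
ORDER_STATUS = {"A100": "shipped", "A200": "processing"}


def handle_webhook(request_body: dict) -> dict:
    """Build a webhook reply from a parsed webhook request body."""
    query_result = request_body.get("queryResult", {})
    intent = query_result.get("intent", {}).get("displayName", "")
    params = query_result.get("parameters", {})

    if intent == "order.status":  # hypothetical intent name
        order_id = params.get("order_id", "")  # hypothetical parameter
        status = ORDER_STATUS.get(order_id)
        if status:
            text = f"Order {order_id} is {status}."
        else:
            text = "I couldn't find that order. Could you repeat the order number?"
    else:
        text = "Sorry, I can't help with that yet."

    return {"fulfillmentText": text}


sample = {
    "queryResult": {
        "intent": {"displayName": "order.status"},
        "parameters": {"order_id": "A100"},
    }
}
print(handle_webhook(sample))
```

In practice this function would sit behind an HTTPS endpoint that receives the JSON body and returns the reply as JSON.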


Metrics: Measuring Your Success

Two metrics are key when determining if a bot your team has created is successful:

Transaction completion rate

The user’s perspective

Transaction completion rate is relatively easy to obtain, while obtaining the user’s perspective is very difficult.

Transaction completion rate is the number of interactions the intelligent bot is able to automate, expressed as a percentage of the interactions it attempts. This number is critical for determining the return on investment (ROI) an intelligent bot may provide. One way to think of bot ROI is to compare the burdened cost of doing customer support only with live agents in the contact center versus the cost of achieving the same support level using a mix of virtual and live agents. This difference in cost, examined over a period of months or years, is the basis for the ROI.
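The arithmetic can be sketched as follows. The figures are invented purely for illustration, and the sketch makes two simplifying assumptions: the bot attempts every contact, and any contact it cannot complete goes to a live agent at the full live-agent cost.

```python
def completion_rate(completed: int, attempted: int) -> float:
    """Completed transactions as a percentage of attempted ones."""
    if attempted == 0:
        return 0.0
    return 100.0 * completed / attempted


def cost_difference(contacts: int,
                    live_cost_per_contact: float,
                    bot_cost_per_contact: float,
                    rate_percent: float) -> float:
    """Live-agent-only cost minus the cost of a bot-plus-live-agent mix."""
    automated = contacts * rate_percent / 100.0
    live_only = contacts * live_cost_per_contact
    blended = (automated * bot_cost_per_contact
               + (contacts - automated) * live_cost_per_contact)
    return live_only - blended


# Invented example: 300 of 1,000 attempted transactions completed by the bot,
# 10,000 contacts per month, $5.00 per live contact, $0.50 per bot contact.
rate = completion_rate(completed=300, attempted=1000)
savings = cost_difference(10_000, 5.00, 0.50, rate)
print(f"Completion rate: {rate:.1f}%")
print(f"Monthly cost difference: ${savings:,.2f}")
```

A fuller model would also subtract the cost of building and maintaining the bot before calling the result a return.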

Other measurable transaction-related statistics include the following:

Recognition accuracy – how accurately the bot transcribes spoken words into text

Intent recognition accuracy – how often the bot correctly recognizes what the customer wants

Disposition – comparing measurements like customer retention rate or churn rate before and after the bot is put into service

Upsell rate – did deploying the bot provide more time for the live agents to upsell and are you seeing a significant improvement in upselling revenue?

Agent efficiency – are live agents more effective because the bot is able to collect introductory data, or perhaps the bot is able to suggest helpful information during the call?

The exact measures a bot-enabled contact center chooses to track will depend on the business case. Good practice suggests setting measurable success goals in advance of starting an intelligent bot project.

The customer perspective side of using intelligent bots is much more difficult to measure. The idea here is to determine how the customer feels when interacting with an automated virtual agent as opposed to working with a live agent. The backlash from a poor interaction with a live agent can be roughly gauged through sentiment analysis of the conversation with the agent. In a poor intelligent bot interaction, the person will simply disengage. This makes it harder to get a sense of how people really feel about using the bot.

There are some indirect measures of satisfaction, however. One is watching the Net Promoter Score to see if it moves up or down after bot introduction.

Another indirect measure is watching to see which customers return to use the bot. If they come back, this implies (but does not necessarily prove) that you’ve developed a useful bot.

Dialogflow in Action

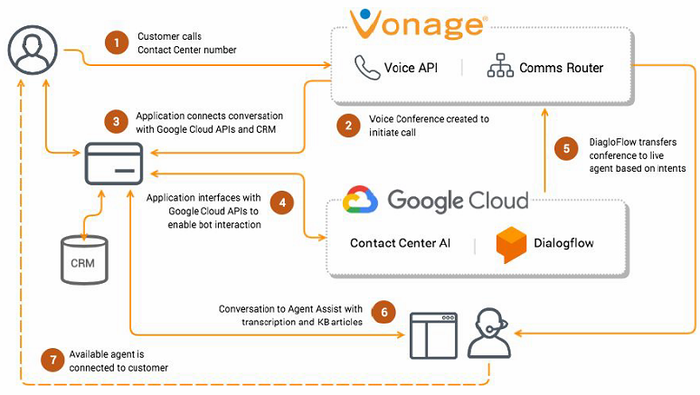

Vonage started working with Google about 18 months ago, and it was also one of the CCAI launch partners. Vonage is highlighting a proof of concept it has in process with its customer, American Financing (see related No Jitter article). American Financing is implementing Virtual Agent (essentially Dialogflow) and Agent Assist within the framework of Vonage’s customer contact center.

In this application, both the intelligent bot and Agent Assist are working off the same knowledge base containing unstructured data that has been fed into CCAI. During working hours, a customer can call in and connect to a highly trained agent who is supported by Agent Assist. However, after hours, American Financing has fewer agents on call. Customers calling in during this time can receive general information from the bot and are then helped by live agents, as needed. These agents rely heavily on the information Agent Assist provides them to offer reliable information to these after-hours callers.

What’s Next

The next article in this series will appear in late June, addressing the questions:

When would you consider building your intelligent bots using just Dialogflow without a contact center partner?

What do CCAI contact center partners or CCaaS partners offer that you don’t get using Dialogflow alone?
