7 Ways to Make Your Google CCAI Deployment Successful

Things to keep in mind as your enterprise looks to leverage artificial intelligence capabilities in the contact center

April 16, 2019

If you’ve been following industry news on No Jitter, you’ve undoubtedly heard about the rapid adoption of Google’s Contact Center AI (CCAI). At Enterprise Connect last month and at Google’s Cloud Next ’19 event last week, systems integrators, contact center software vendors, and developers made a wave of announcements declaring support for Google’s CCAI. CCAI and Google’s NLP solution, Dialogflow, are red-hot right now.

In fact, developer adoption of Dialogflow, Google’s development suite for creating conversational interfaces, has grown by nearly 600% in just two years, as Google product manager Shantanu Misra shared on stage at Next ’19. That growth alone is a strong endorsement of how valuable businesses find both AI-powered agent assistance and intelligent virtual agents.

On No Jitter, Beth Shultz did a great job of summarizing the recent announcements and Sheila McGee-Smith wrote a detailed article describing the Google/Salesforce CCAI announcement and their work with Five9 and Hulu. So, we know that vendors are supporting CCAI and that enterprises are demanding the type of improved customer experience that it’s poised to offer. The next question for enterprises should be, “How can I deploy a solution that harnesses all of this powerful new technology and ensure that it solves real-world business problems for my customers?”

At Inference, it is our mission to build a platform for intelligent virtual agents that makes it easier for businesses to deploy self-service applications powered by conversational AI APIs provided by vendors like Google. We’ve learned that although conversational AI has made incredible gains in both quality and ease of deployment, it’s still by no means a solution that you can just deploy and expect to work out of the box. I had the privilege of speaking on this topic with Google’s Adam Champy and CallMiner’s Jeff Gallino during an Enterprise Connect session led by Brent Kelly of KelCor. In this post, I’d like to share some of the things we’ve learned while working with our partners to build and deploy CCAI applications. I hope these suggestions help you as you create your own CCAI development and deployment plans.

1. The tools you decide to use will have a great influence on the team you'll need to succeed

With CCAI, the technology you need to build your applications is now available to you, as a service, in the Google Cloud. This includes Speech-to-Text, WaveNet Text-to-Speech, and Dialogflow. The quality of these API-driven solutions is superb because Google’s neural networks are being trained on the millions of conversations that consumers have with their Google Home and Android devices every day.

However, the platform is still an environment best suited for developers. One of the major benefits offered by modern cloud contact center platforms is the ability for non-technical users (e.g., call center managers) to manage operations without depending on IT. In a similar fashion, if you plan to build virtual agents, a software platform that offers a no-code, drag-and-drop solution for building virtual agent applications will eliminate your dependence on professional services or dev teams to build, deploy, and modify applications. Building Dialogflow agents can be a challenge if you’re not a developer. A good virtual agent platform will enable you to bring your own virtual agents or use a set of pre-built Dialogflow agents.

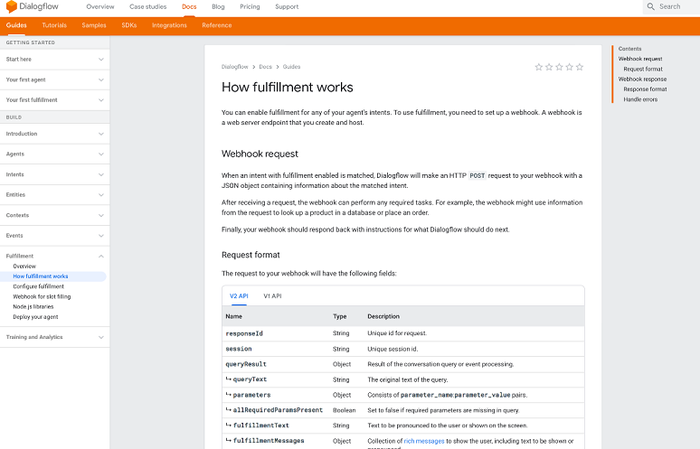

2. Fulfillment is one of the most important challenges

With CCAI and Dialogflow, you program the system to have a conversation with the user. During the course of the conversation, you may use text-to-speech to ask the user a question, speech recognition to transcribe the response into text, and Dialogflow to determine what the user is actually asking the virtual agent to do (also known as “intent matching”).
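To make the shape of intent matching concrete, here is a deliberately simple sketch. Dialogflow uses trained machine-learning models, not the word-overlap scoring below, so treat this only as an illustration of the contract: an utterance goes in, and an intent name (or a fallback) comes out. The intent names, training phrases, and the `match_intent` function are all hypothetical.

```python
import re

# Hypothetical intents and training phrases, for illustration only.
INTENTS = {
    "book_hotel": ["book a hotel", "hotel reservation", "reserve a room"],
    "check_order": ["where is my order", "order status", "track my package"],
    "talk_to_agent": ["speak to a person", "live agent", "representative"],
}

FALLBACK = "fallback"


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def match_intent(utterance, intents=INTENTS, threshold=0.5):
    """Return the intent whose training phrase has the largest fraction of its
    words present in the utterance, or FALLBACK if no phrase clears threshold.
    A toy stand-in for an NLU engine, not how Dialogflow scores intents."""
    words = tokenize(utterance)
    best_intent, best_score = FALLBACK, 0.0
    for intent, phrases in intents.items():
        for phrase in phrases:
            phrase_words = tokenize(phrase)
            score = len(words & phrase_words) / len(phrase_words)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else FALLBACK
```

For example, `match_intent("Book a hotel reservation in New York, next Wednesday evening")` returns `"book_hotel"`, while an off-topic request such as `"What's the weather like?"` falls through to `"fallback"`. In a real deployment the fallback path is where the virtual agent reprompts or offers a live agent.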

For example, the user may want to “book a hotel reservation in New York, next Wednesday evening.” To complete the transaction, you’ll need to update a back-end reservation system like Amadeus HotSOS. Without a virtual agent platform, this is done through Dialogflow fulfillment code that you write and connect to your agent, and, as I explained in my first point, that requires someone who understands JSON and JavaScript programming.
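Here is a minimal sketch of what that fulfillment code can look like. In Dialogflow ES (v2), a fulfillment webhook receives a JSON request whose `queryResult` carries the matched intent’s `displayName` and the extracted `parameters`, and it replies with JSON such as a `fulfillmentText` string. Dialogflow’s inline editor uses JavaScript (Node.js), but a webhook can be written in any language; Python is used here for consistency. The intent name `book_hotel`, the parameter names `city` and `date`, and the `create_reservation` helper are hypothetical, and the helper does not represent Amadeus’s actual API. A production webhook would also sit behind an HTTPS endpoint, which is omitted to keep the example self-contained.

```python
import json


def create_reservation(city, date):
    """Hypothetical stand-in for a call to a back-end reservation system.
    A real deployment would call the vendor's API here."""
    return {"confirmation": f"{city[:3].upper()}-{date}", "city": city, "date": date}


def handle_webhook(request_body):
    """Turn a Dialogflow ES (v2) webhook request body into a fulfillment response."""
    req = json.loads(request_body)
    result = req.get("queryResult", {})
    intent = result.get("intent", {}).get("displayName", "")
    params = result.get("parameters", {})

    if intent == "book_hotel":
        city = params.get("city")
        date = params.get("date")
        if not city or not date:
            # Unfilled parameters can arrive missing or as empty strings.
            text = "Which city and date would you like to book?"
        else:
            booking = create_reservation(city, date)
            text = (f"You're booked in {booking['city']} on {booking['date']}. "
                    f"Your confirmation number is {booking['confirmation']}.")
    else:
        text = "Sorry, I can't help with that yet."
    return json.dumps({"fulfillmentText": text})
```

Even this small handler shows why fulfillment is a developer task: someone has to parse the request JSON, validate parameters, call the back end, and handle failures, which is exactly the work a virtual agent platform aims to hide.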

3. Being “on-net” offers strong economic benefits

Consumers still overwhelmingly use the telephone to contact customer service, so a critical element of a CCAI deployment will likely include telephony. As Adam Champy pointed out at Next ’19, enterprises can purchase CCAI through a contact center partner like Cisco, 8x8, or Five9. You can also get access to CCAI through your telecommunications carrier. In many cases, an enterprise will purchase a package of minutes at a per-minute rate from an over-the-top (OTT) provider. The enterprise will be charged for calls coming into its contact center (especially on toll-free numbers), and it will be charged for the leg of the call that connects to a live agent (especially if that agent is working from home or in a remote location).

These telephony charges can quickly add up. A common retail use case is for a retailer to accept an inbound call and enable the caller to have a conversation with a virtual agent. The caller may then ask to be connected to a local store (or department) to speak to a live sales rep. If the retailer has deployed the virtual agent “on-net” through their telecommunications carrier, then this transfer should be no different than transferring to a local extension. These types of transfers are typically free, as they are considered to be within the same enterprise. This can make a huge difference in the economics of the overall solution.
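A quick back-of-the-envelope model shows how the transfer leg affects the economics. This sketch assumes the inbound leg is billed for the full duration of every call, that an off-net transfer adds a second billed leg for the time spent with the live rep, and that an on-net transfer adds nothing. The call volume, durations, transfer share, and per-minute rates are made-up illustrative values, not real carrier pricing.

```python
def monthly_telephony_cost(calls, va_minutes, transfer_share, live_minutes,
                           inbound_per_min, outbound_per_min, on_net):
    """Estimate monthly telephony spend for a virtual agent deployment.

    calls            -- inbound calls per month
    va_minutes       -- average minutes each caller spends with the virtual agent
    transfer_share   -- fraction of calls transferred to a live rep (0.0 to 1.0)
    live_minutes     -- average minutes a transferred caller spends with the rep
    inbound_per_min  -- rate for the inbound (e.g., toll-free) leg
    outbound_per_min -- rate for the extra leg of an off-net transfer
    on_net           -- True if transfers stay inside the carrier network
    """
    transferred = calls * transfer_share
    # The inbound leg stays up for the whole call, including time with the rep.
    inbound_minutes = calls * va_minutes + transferred * live_minutes
    # Off-net, the transfer to the store is a second billed leg; on-net it is
    # treated like a free transfer to a local extension.
    transfer_leg_minutes = 0 if on_net else transferred * live_minutes
    total = inbound_minutes * inbound_per_min + transfer_leg_minutes * outbound_per_min
    return round(total, 2)


off_net = monthly_telephony_cost(100_000, 3, 0.4, 5, 0.015, 0.015, on_net=False)
on_net = monthly_telephony_cost(100_000, 3, 0.4, 5, 0.015, 0.015, on_net=True)
print(f"off-net: ${off_net:,.2f}  on-net: ${on_net:,.2f}")
```

With these hypothetical inputs, the off-net deployment costs about $10,500 a month and the on-net deployment about $7,500, because the 200,000 minutes of transferred conversation are no longer billed twice. Plug in your own volumes and contract rates to see whether the difference matters for your case.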


4. Most people have difficulty thinking beyond their highly informed context

When designing conversational applications, developers often build the solution with a set of pre-existing expectations. In other words, they expect users to say specific things in specific ways and to have a set of specific problems. In practice, users often say things in ways that the designer didn’t or couldn’t predict, so make sure that you include people with a variety of contexts and opinions in the design process. You’ll then want to tune the application using real-world utterances from real callers. Remember that these are learning systems and that you need to let them learn.
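One simple way to put real-world utterances to work is to review the ones the system failed to understand. The sketch below assumes a hypothetical conversation log exported as a list of records, each with an `utterance` and the `intent` it matched (with `"fallback"` meaning nothing matched); your platform’s export format will differ. It groups unmatched utterances after normalizing case and whitespace, so the most common gaps surface first as candidates for new training phrases or intents.

```python
from collections import Counter


def top_unmatched(turns, n=5, fallback="fallback"):
    """Return the n most common utterances that fell through to the fallback
    intent, normalized for case and whitespace, as (utterance, count) pairs."""
    counts = Counter(
        " ".join(turn["utterance"].lower().split())
        for turn in turns
        if turn["intent"] == fallback
    )
    return counts.most_common(n)


log = [
    {"utterance": "Where's my stuff", "intent": "fallback"},
    {"utterance": "where's  my STUFF", "intent": "fallback"},
    {"utterance": "cancel it", "intent": "fallback"},
    {"utterance": "order status", "intent": "check_order"},
]
print(top_unmatched(log, n=2))  # [("where's my stuff", 2), ('cancel it', 1)]
```

Exact-match counting is crude; similar phrasings such as “where is my stuff” still land in separate buckets, so a human reviewer remains part of the loop.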

5. Be careful in very proprietary voice domains

When using a streaming speech API like Google’s, keep in mind that it has been trained not on your data, but on everyone’s data. What’s important to you may not be important to others, and this can often lead the speech recognition engine to misinterpret what the user said.

There are a couple of good solutions to this problem: 1) You could switch from open speech to a closed grammar (a good virtual agent platform will allow you to do this). The closed grammar offers a domain-specific list of items to match against. For example, the caller might say “I need toner for my MFP M477fnw printer.” The closed grammar ensures that, at that point in the dialog, the recognizer is expecting one of a domain-specific list of responses. 2) You can also now use Phrase Hints, which Google supports, as do some virtual agent platforms, to greatly improve the likelihood that your proprietary vocabulary is recognized reliably.
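The sketch below illustrates both ideas under stated assumptions. `match_closed_list` is not a true closed grammar, which constrains the recognizer itself; it is a swapped-in approximation that fuzzy-matches an open-speech transcript against a domain-specific list using Python’s `difflib`. `phrase_hint_config` builds the `config` portion of a Cloud Speech-to-Text v1 REST recognize request, where phrase hints are passed in `speechContexts[].phrases`; it assumes 8 kHz mu-law telephony audio and only builds the request body, since sending it requires credentials. The printer model list and the 0.6 cutoff are hypothetical.

```python
import difflib

# Hypothetical domain-specific list of models this dialog step expects.
PRINTER_MODELS = ["MFP M477fnw", "MFP M281fdw", "Pro M404n", "Pro M15w"]


def match_closed_list(transcript, choices=PRINTER_MODELS, cutoff=0.6):
    """Return the choice that best matches some run of words in the
    transcript, or None if no similarity score reaches `cutoff`."""
    words = transcript.lower().split()
    best_choice, best_score = None, 0.0
    for choice in choices:
        target = choice.lower()
        n = len(target.split())
        # Compare the choice against every window of n consecutive words.
        for i in range(len(words) - n + 1):
            window = " ".join(words[i:i + n])
            score = difflib.SequenceMatcher(None, window, target).ratio()
            if score > best_score:
                best_choice, best_score = choice, score
    return best_choice if best_score >= cutoff else None


def phrase_hint_config(phrases, language_code="en-US"):
    """Build the `config` object of a Speech-to-Text v1 REST recognize request
    that passes `phrases` as phrase hints (assumes 8 kHz mu-law audio)."""
    return {
        "encoding": "MULAW",
        "sampleRateHertz": 8000,
        "languageCode": language_code,
        "speechContexts": [{"phrases": list(phrases)}],
    }


print(match_closed_list("I need toner for my MFP M477 fnw printer"))
```

Here a transcript that splits the model number into “M477 fnw” still resolves to `MFP M477fnw`, while an unrelated request returns `None` so the dialog can reprompt. Phrase hints work upstream of this step by biasing the recognizer toward your vocabulary in the first place, so the two approaches complement each other.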

6. Allow the user to transfer… with context

Don’t lock your users inside a virtual agent. Make sure they have the option to connect to a live agent. Many systems also allow you to detect anger and other emotions from the user as he or she speaks to the virtual agent. If this happens, get the user to a live agent, and make sure to provide your live agent with contextual background on the user’s conversation with the automated system so the agent can tactfully defuse the situation. In general, whenever a conversation passes to a human, make sure you give that person the benefit of understanding the previous conversation.
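Here is a minimal sketch of escalating with context. It assumes each caller turn carries a sentiment score from -1.0 (very negative) to 1.0 (very positive), the range Google’s Natural Language API uses, and escalates when the caller asks for a person or the latest turn falls below a threshold. The `Turn` and `Conversation` types, the keyword list, the -0.5 threshold, and the handoff fields are hypothetical; the point is that the live agent receives the intent and recent transcript rather than a cold transfer.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical threshold; tune it on real calls.
ESCALATION_THRESHOLD = -0.5
# Crude keyword check for explicit requests to reach a person.
AGENT_REQUESTS = ("agent", "representative", "human")


@dataclass
class Turn:
    speaker: str            # "caller" or "bot"
    text: str
    sentiment: float = 0.0  # assumed score in [-1.0, 1.0]


@dataclass
class Conversation:
    caller_id: str
    intent: str
    turns: List[Turn] = field(default_factory=list)


def caller_turns(conv):
    return [t for t in conv.turns if t.speaker == "caller"]


def should_escalate(conv, threshold=ESCALATION_THRESHOLD):
    """Escalate if the caller asks for a person or their latest turn is negative."""
    turns = caller_turns(conv)
    if not turns:
        return False
    if any(word in t.text.lower() for t in turns for word in AGENT_REQUESTS):
        return True
    return turns[-1].sentiment <= threshold


def handoff_context(conv, last_n=4):
    """Build the summary a live agent sees when the call is transferred."""
    turns = caller_turns(conv)
    latest: Optional[float] = turns[-1].sentiment if turns else None
    return {
        "caller_id": conv.caller_id,
        "intent": conv.intent,
        "latest_sentiment": latest,
        "recent_transcript": [f"{t.speaker}: {t.text}" for t in conv.turns[-last_n:]],
    }
```

For a caller whose last turn is “This is the third time I’ve called!” with a score of -0.8, `should_escalate` returns `True`, and `handoff_context` hands the agent the matched intent plus the last few lines of the exchange, so the caller doesn’t have to start over.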

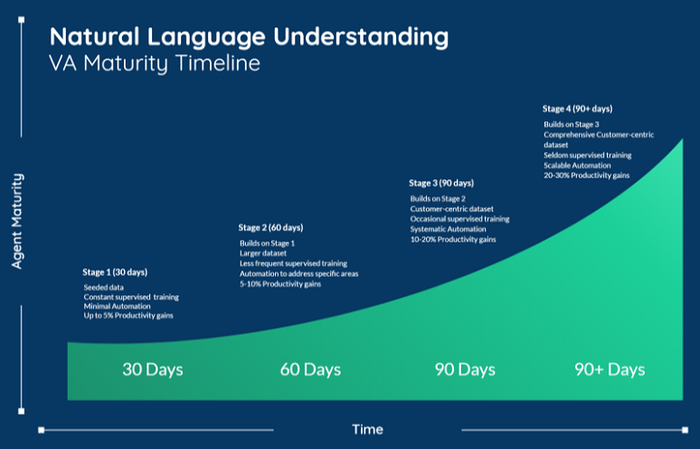

7. With natural language, deployment is not the end

Remember that a virtual agent that uses a natural language processing (NLP) engine like Dialogflow is not an IVR. It’s designed to learn and mature over time. Make sure that you plan for this. We typically see deployments follow a common maturity path:

30 days – During the first stage, you’ll invest in the foundations of natural language understanding (NLU). This involves providing relevant data and constant supervised training.

60 days – At this point, you’ll have established your training processes and determined what data you’ll need going forward to improve your NLU capabilities.

90+ days – This is a typical “crossover” point where the system begins to deliver real value in terms of NLU for your customers. This is the point where ROI becomes measurable.

90+ days – NLU automation becomes scalable outside of the initial scope of the project.

Conclusion

Google Contact Center AI is becoming widely adopted by both vendors and enterprises as a solution to assist live agents and improve self-service for customers. The question now for most enterprises is how to build and deploy solutions that harness its power with the least amount of effort, while providing the greatest return on investment. NLU and conversational AI are driving the next wave of call center innovation, and we’ll continue to deliver the tools necessary to take full advantage of these emerging technologies.